Big Data Analytics Technologies and Tools

- [Zaanse Schans, Zaanstad, Netherlands - Michal Soukup]

Big Data Science - The Future of Analytics

- Overview

Big data analytics is the process of collecting, processing, and analyzing large amounts of data. The data can come from a variety of sources, such as social media, mobile, web, email, and networked smart devices.

The main goal of data collection is to gather as much relevant data as possible. In general, more relevant, high-quality data supports richer insights.

Big data analytics can help inform many kinds of business decisions. Commonly cited approaches include:

- Descriptive analytics: Summarizes historical data in a form that is easy to read and interpret, helping an organization understand the changes that have occurred in the business.

- Predictive analytics: Applies data analysis, statistical models, machine learning, and artificial intelligence to historical data to uncover patterns and predict what is likely to happen in the future.

- Prescriptive analytics: Recommends specific actions an organization should take to improve its results.

- Data mining: Involves searching and analyzing large batches of raw data to identify patterns and extract useful information. Companies use data mining software, for example, to learn more about their customers.
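To make the difference between descriptive and predictive analytics concrete, here is a minimal sketch in Python. The monthly sales figures are hypothetical, and the "prediction" is a deliberately simple least-squares trend line rather than a production forecasting model. The helper names `describe` and `linear_forecast` are illustrative only.

```python
from statistics import mean

# Hypothetical monthly sales figures (units sold); illustrative data only.
monthly_sales = [120, 135, 150, 160, 178, 190]


def describe(values):
    """Descriptive analytics: summarize what has already happened."""
    return {
        "total": sum(values),
        "mean": mean(values),
        "min": min(values),
        "max": max(values),
        # Month-over-month changes show how the business has been trending.
        "changes": [b - a for a, b in zip(values, values[1:])],
    }


def linear_forecast(values, steps_ahead=1):
    """Predictive analytics (simplified): fit a least-squares line to the
    history and extrapolate it forward by `steps_ahead` periods."""
    n = len(values)
    xs = range(n)
    x_bar = mean(xs)
    y_bar = mean(values)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    return intercept + slope * (n - 1 + steps_ahead)


summary = describe(monthly_sales)
forecast = linear_forecast(monthly_sales)
print(summary)
print(round(forecast, 1))
```

The descriptive step only reports on the past, while the predictive step extrapolates from it. A prescriptive layer would go one step further and turn such a forecast into a recommended action, such as adjusting stock levels.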

- Big Data Analytics - Benefits and Advantages

Data has become a primary resource for value generation. Today, big data falls into three categories of data sets: structured, unstructured, and semi-structured. Big data analytics software is widely used to provide meaningful analysis of large data sets, helping organizations identify current market trends, customer preferences, and other information.
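The three categories call for different handling. The following Python sketch uses small, hypothetical records (a CSV snippet, a JSON document, and a customer comment) to show how each is typically read: structured data maps directly onto named columns, semi-structured data carries its own keys but may vary between records, and unstructured text has to be broken down before it can be analyzed. Simple word tokenizing stands in here for real text analytics.

```python
import csv
import io
import json
import re

# Hypothetical examples of the three categories of data; illustrative only.
structured = "customer_id,country,total\n1,NL,250.0\n2,DE,99.5\n"
semi_structured = '{"customer_id": 3, "country": "FR", "tags": ["vip"]}'
unstructured = "Loved the fast delivery, but the packaging was damaged."

# Structured: a fixed schema, so every row has the same named columns.
rows = list(csv.DictReader(io.StringIO(structured)))
totals = [float(row["total"]) for row in rows]

# Semi-structured: self-describing keys, but fields may differ per record.
record = json.loads(semi_structured)
tags = record.get("tags", [])  # Tolerate records that lack this field.

# Unstructured: no schema, so meaning must be extracted (here, by tokenizing).
words = re.findall(r"[a-z']+", unstructured.lower())

print(totals)
print(tags)
print(len(words))
```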

Big data analytics is the often complex process of examining large and varied data sets, or big data, to uncover information - such as hidden patterns, unknown correlations, market trends and customer preferences - that can help organizations make informed business decisions.

Big data analytics helps organizations harness their data and use it to identify new opportunities. That, in turn, can lead to smarter business moves, more efficient operations, higher profits, and happier customers.

Driven by specialized analytics systems and software, as well as high-powered computing systems, big data analytics offers various business benefits, including cost reduction, faster and better decision making, new revenue opportunities, more effective marketing, better customer service, new products and services, and competitive advantages over rivals.

Big data analytics applications enable big data analysts, data scientists, predictive modelers, statisticians and other analytics professionals to analyze growing volumes of structured transaction data, plus other forms of data that are often left untapped by conventional BI (Business Intelligence) and analytics programs.

This encompasses a mix of semi-structured and unstructured data, for example Internet clickstream data, web server logs, social media content, text from customer emails and survey responses, mobile phone records, and machine data captured by sensors connected to the Internet of Things (IoT).
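Web server logs are a good example of semi-structured data that conventional tools often leave untapped. The Python sketch below assumes log lines in the widely used Common Log Format; the sample lines and IP addresses are hypothetical. It parses each line into a structured record, skips malformed lines, and then counts responses by status code and page views by path.

```python
import re
from collections import Counter

# Pattern for the Common Log Format:
# host ident authuser [time] "method path protocol" status size
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) (?P<size>\d+|-)'
)

# Hypothetical log lines; documentation-range IP addresses only.
sample_logs = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /products HTTP/1.1" 200 5120',
    '203.0.113.5 - - [10/Oct/2023:13:55:40 +0000] "GET /cart HTTP/1.1" 200 2048',
    '198.51.100.7 - - [10/Oct/2023:13:56:02 +0000] "GET /missing HTTP/1.1" 404 -',
    "not a valid log line",
]


def parse(lines):
    """Turn raw log lines into structured dictionaries."""
    records = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # Skip malformed lines rather than failing the batch.
        rec = match.groupdict()
        rec["status"] = int(rec["status"])
        # A "-" size means no body was returned; treat it as zero bytes.
        rec["size"] = 0 if rec["size"] == "-" else int(rec["size"])
        records.append(rec)
    return records


records = parse(sample_logs)
status_counts = Counter(r["status"] for r in records)
page_views = Counter(r["path"] for r in records if r["status"] == 200)
print(status_counts)
print(page_views)
```

At big data scale, the same parse-then-aggregate idea would typically run on a distributed processing engine rather than in a single Python process.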

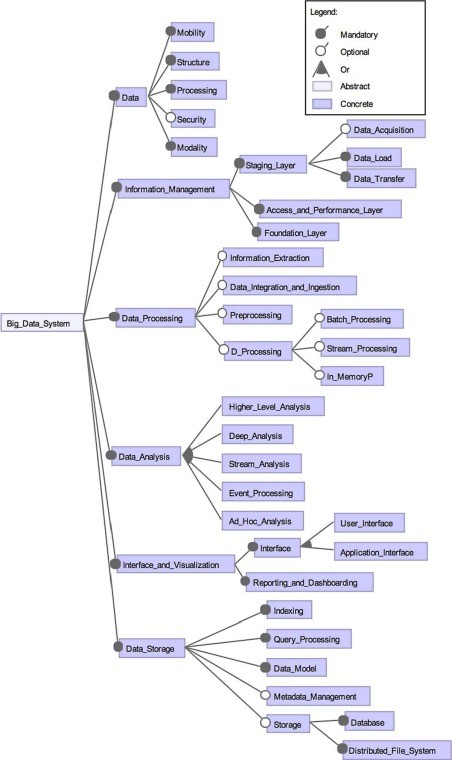

- (Feature Model of Big Data Systems - ScienceDirect)

- Main Types of Big Data Technologies

Big data analytics is the process of extracting useful information by analyzing different types of big data sets. It is used to discover hidden patterns, market trends, and consumer preferences to support organizational decision-making. Several steps and technologies are involved in big data analytics.

Big data technologies can be categorized into four main types:

- Data storage: Storing data is one of several vital functions in our digital world. Storage is commonly organized into a hierarchy of four levels: primary, secondary, tertiary, and offline storage.

- Data mining: There are four main types of data mining tasks: association rule learning, clustering, classification, and regression.

- Data analytics: The various types of big data analytics are descriptive analytics, diagnostic analytics, predictive analytics, and prescriptive analytics.

- Data visualization: Presents data and analysis results as charts, graphs, maps, and dashboards so that patterns and trends can be understood quickly.
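Of the data mining tasks listed above, association rule learning is the easiest to show in a few lines. The Python sketch below uses hypothetical shopping baskets and brute-force pair counting. It is a simplified illustration of the support and confidence measures, not the full Apriori algorithm, and the thresholds `min_support` and `min_confidence` are arbitrary example values.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets; illustrative data only.
transactions = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]


def association_rules(transactions, min_support=0.4, min_confidence=0.6):
    """Find rules "lhs -> rhs" between pairs of items.

    support    = share of baskets containing both items
    confidence = share of baskets with lhs that also contain rhs
    """
    n = len(transactions)
    item_counts = Counter(item for t in transactions for item in t)
    pair_counts = Counter(
        pair for t in transactions for pair in combinations(sorted(t), 2)
    )
    rules = []
    for (a, b), count in pair_counts.items():
        support = count / n
        if support < min_support:
            continue  # Pair is too rare to be interesting.
        # A pair can produce a rule in either direction.
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]
            if confidence >= min_confidence:
                rules.append((lhs, rhs, support, confidence))
    return rules


rules = association_rules(transactions)
for lhs, rhs, support, confidence in rules:
    print(f"{lhs} -> {rhs}: support={support:.2f}, confidence={confidence:.2f}")
```

With these baskets, customers who buy butter always buy bread as well, while bread buyers pick up milk only half the time. That is exactly the kind of pattern retailers mine to plan promotions.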

- AI-Powered Analytics

Artificial intelligence (AI) can be a key to unlocking the power of data and gaining a competitive edge. AI systems can ingest and evaluate vast amounts of almost any kind of customer-related information very quickly.

AI excels at finding insights and patterns in large datasets that humans would struggle to spot, and it does so at scale and at speed. Today, AI-powered tools exist that can answer questions you ask about your website data. AI can also recommend actions based on opportunities it detects in your analytics.

Machine learning (ML) tools give systems the ability to learn and improve from experience automatically, without being explicitly programmed. Within AI-powered analytics, ML algorithms apply what they have learned from past data to new data to predict future outcomes.
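The idea of "learning from past data, then applying it to new data" can be sketched with one of the simplest ML methods, k-nearest neighbors. In the Python example below, the customer features, labels, and the `predict` helper are all hypothetical. The model stores labeled past examples and classifies a new customer by majority vote among the `k` most similar ones.

```python
import math
from collections import Counter

# Hypothetical past customers:
# (visits per month, average basket in EUR) -> outcome.
training_data = [
    ((1, 20.0), "churned"),
    ((2, 15.0), "churned"),
    ((1, 35.0), "churned"),
    ((8, 60.0), "stayed"),
    ((10, 45.0), "stayed"),
    ((7, 80.0), "stayed"),
]


def predict(features, data, k=3):
    """k-nearest neighbors: label a new point by majority vote of the
    k closest past examples, using Euclidean distance."""
    neighbors = sorted(data, key=lambda item: math.dist(features, item[0]))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Apply what was learned from past customers to new ones.
print(predict((2, 25.0), training_data))
print(predict((9, 55.0), training_data))
```

In practice, features measured on different scales (here, visit counts versus euros) would normally be standardized first, so that no single feature dominates the distance calculation.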
