The Next-Generation Edge-Cloud Ecosystem

- Overview

Cloud adoption has skyrocketed as companies seek computing and storage resources that can scale up and down in response to changing business needs. But even taking into account the cost and agility advantages of cloud computing, there is growing interest in another deployment model: edge computing, in which computation happens at or near the data source. It can support new use cases, especially innovative artificial intelligence (AI) and machine learning (ML) applications that are critical to modern business success.

Fog computing is an extension of cloud computing, located between edge computing and the cloud. When edge computers send massive amounts of data to the cloud, fog nodes receive the data and analyze the important content.

After that, fog nodes transmit important data to the cloud for storage and delete unimportant data or keep it for further analysis. In this way, fog computing can save a lot of cloud space and quickly transmit important data.
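The triage step described above can be sketched in a few lines of Python. This is only an illustration: the `FogNode` class, its `threshold` field, and the rule that "important" means a large absolute value are all assumptions made for the example, not part of any fog computing standard.

```python
from dataclasses import dataclass, field


@dataclass
class FogNode:
    """Hypothetical fog node that triages readings before they reach the cloud."""
    threshold: float                               # assumed importance cutoff
    retained: list = field(default_factory=list)   # kept locally for later analysis

    def triage(self, readings):
        """Return the readings to forward to the cloud; retain the rest locally."""
        to_cloud = []
        for value in readings:
            if abs(value) >= self.threshold:
                to_cloud.append(value)        # important: forward for storage
            else:
                self.retained.append(value)   # unimportant: keep here (or delete)
        return to_cloud


node = FogNode(threshold=5.0)
forwarded = node.triage([0.2, 7.5, 1.1, -9.0])
print(forwarded)       # [7.5, -9.0]
print(node.retained)   # [0.2, 1.1]
```

In this toy run only two of the four readings travel to the cloud, which is the space and bandwidth saving the text refers to.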

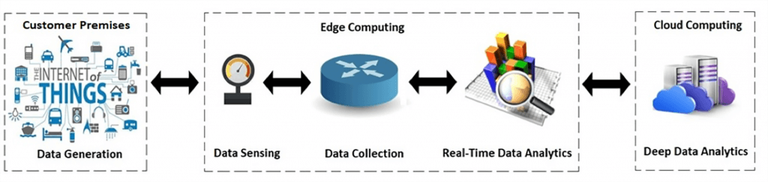

The promise of the edge comes down to data. Specifically, data needs to be collected, processed, and analyzed as close as possible to where it is generated, whether on a factory floor, in an autonomous vehicle, or in a smart building system.

The ability to run AI models directly on edge data eliminates the extra step of moving workloads to the cloud, reducing latency and cost. Most importantly, it is the key to unlocking real-time insights.

Please refer to the following for more information:

- Wikipedia: Cloud Computing

- Wikipedia: Edge Computing

- Edge and Fog Computing

Pushing computing, control, data storage, and processing to the cloud has been a key trend in the past decade. However, cloud technology alone is facing increasing limitations in meeting the computing and intelligent network requirements of many new systems and applications.

Local computing at the edge of the network and between connected devices is often necessary, for example, to meet strict latency requirements, integrate local multimedia context information in real time, reduce processing load and save terminal battery power, improve network reliability and resilience, and overcome bandwidth and cost limitations of long-distance communication.

The cloud is now "sinking" to the edge of the network and sometimes even spreading to end-user devices, forming a "fog". Fog computing is an intermediary between hardware and remote servers. It dictates which information should be sent to the server and which information can be processed locally. Therefore, the fog acts like an intelligent gateway that can reduce the load on the cloud, enabling more efficient data storage, processing, and analysis.

Fog computing distributes computing, data processing, and network services closer to end users along the cloud-to-things (C2T) continuum. Rather than centralizing data and computing in a few large clouds, fog computing envisions deploying many fog systems close to end users, or where computing and intelligent networking can best meet user needs.

Fog computing and networking propose a new architectural vision in which distributed edge devices and user devices collaborate with each other and with the cloud to perform computing, control, networking, and data management tasks.

- From Data Center, To Cloud, To Edge

The general IT trend sent computing power to the cloud, but the pendulum is now swinging back toward the edge. Over the last few decades, data has migrated first from on-premises systems to cloud data centers and now from the cloud to “edge” points closer to the source where it is generated.

Edge computing is the next big wave of technology architecture in information management, moving processing power away from centralized and cloud data centers and closer to the origins of physical data. Edge computing is a distributed computing paradigm that brings computation and data storage closer to the location of the device.

Edge computing originated from Content Delivery Networks (CDNs). Now, companies use virtualization to extend the capabilities. Edge computing allows processing to be performed locally at multiple decision points for the purpose of reducing network traffic.

There’s a misconception that edge computing will replace the cloud. On the contrary, it functions in conjunction with the cloud. Large-scale data processing will largely remain in the cloud, while short-lived data that is generated by users and relates only to those users can be processed at the edge.

The goal of moving closer to the edge - that is, within miles of the customer premises - is to boost the performance of the network, enhance the reliability of services, and reduce the cost of moving data computation to distant servers, thereby mitigating bandwidth and latency issues. Next-generation applications focused on machine-to-machine interaction, built on concepts such as the Internet of Things (IoT), AI, and machine learning, will shift the focus to edge computing.

Organizations that rely heavily on data are increasingly likely to use cloud, fog, and edge computing infrastructures. These architectures allow organizations to take advantage of a variety of computing and data storage resources, including IoT.

In 2020, there were an estimated 30 billion IoT devices worldwide, and this number was projected to exceed 75 billion connected devices by 2025. All these devices produce huge amounts of data that have to be processed quickly and in a sustainable way.

To meet the growing demand for IoT solutions, fog and edge computing are taking their place alongside cloud computing.

- Where the Edge Meets the Cloud

As companies progress in their data-driven business, they need to create an IT environment that includes both edge computing and cloud computing. Data collected and analyzed at the edge can trigger real-time responses, such as troubleshooting industrial equipment before a machine shuts down or redirecting autonomous vehicles away from dangerous locations.

At the same time, equipment data from that machine or vehicle can be sent to the cloud and aggregated with other data for deeper analysis that drives better-informed decisions and future business strategies.
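A minimal sketch of this split follows, assuming a temperature sensor on a machine. The limit `TEMP_LIMIT_C`, the `"throttle"` action, and the shape of the summary are hypothetical; the point is only that the reaction happens locally while a compact aggregate is what gets sent to the cloud.

```python
from statistics import mean

TEMP_LIMIT_C = 90.0   # assumed safety limit, for illustration only


def handle_at_edge(temps_c):
    """React locally to each reading and build a compact summary for the cloud."""
    actions = []
    for t in temps_c:
        if t > TEMP_LIMIT_C:
            actions.append("throttle")   # immediate local response, no round trip
    summary = {
        "count": len(temps_c),
        "mean_c": round(mean(temps_c), 2),
        "max_c": max(temps_c),
        "alerts": len(actions),
    }
    return actions, summary


actions, summary = handle_at_edge([71.0, 88.5, 93.2, 79.3])
print(actions)   # ['throttle']
print(summary)   # the only payload that would be uploaded for deeper analysis
```

The cloud side would then aggregate many such summaries across machines; that part is omitted here.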

Connectivity has reached the point where it is a baseline expectation, and it is fueling the idea of an intelligent edge. Intelligence starts at the sensing level at the edge and spans the networked systems of systems, eventually reaching the cloud. We treat it as a continuum.

- Edge Computing, Multi-access Edge Computing

Edge computing is a computing paradigm in which computation is performed mostly on power-constrained devices with limited processing capability or in on-premises data centers. Edge systems depend only loosely on large-scale cloud computing resources, drawing on them as needed (i.e., via computational offloading). Edge computing spans low-power Internet of Things (IoT) devices, mobile computing devices such as smartphones, and high-performance edge servers for application domains such as autonomous cars and robotics.
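Computational offloading can be reasoned about with a simple timing comparison. The sketch below uses a deliberately simplified model (an assumption, not something the text specifies): running locally costs `cycles / f_local_hz`, while offloading costs the upload time plus a network round trip plus the remote compute time. All parameter names and numbers are illustrative.

```python
def should_offload(cycles, data_bits, f_local_hz, f_remote_hz, uplink_bps, rtt_s):
    """Compare estimated local vs. offloaded completion time for one task."""
    local_s = cycles / f_local_hz
    remote_s = data_bits / uplink_bps + rtt_s + cycles / f_remote_hz
    return remote_s < local_s, local_s, remote_s


# Heavy task, 1 MB of input: offloading wins (about 0.65 s vs. 2.0 s).
heavy = should_offload(cycles=2e9, data_bits=8e6, f_local_hz=1e9,
                       f_remote_hz=10e9, uplink_bps=20e6, rtt_s=0.05)

# Light task, same input: the upload alone costs more than computing locally.
light = should_offload(cycles=1e8, data_bits=8e6, f_local_hz=1e9,
                       f_remote_hz=10e9, uplink_bps=20e6, rtt_s=0.05)

print(heavy[0], light[0])   # True False
```

A real scheduler would also weigh energy use and link reliability, which this model ignores.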

Edge computing refers to computing happening at the edge of a network. Various access points define the network edge, hence the name for its architectural standard, Multi-access Edge Computing (MEC). Edge access points include cell phone towers, routers, Wi-Fi, and local data centers. Edge computing in telecom, often referred to as Mobile Edge Computing (or Multi-access Edge Computing), provides execution resources (compute and storage) for applications with networking close to the end users, typically within or at the boundary of operator networks.

The acronym MEC is used interchangeably to stand for Mobile Edge Computing or Multi-access Edge Computing. In September 2017, the European Telecommunications Standards Institute (ETSI) Industry Specification Group (ISG) officially changed its name from "Mobile Edge Computing" to "Multi-access Edge Computing". MEC characteristics include: proximity, ultra-low latency, high bandwidth, and virtualization.

- The Need For Edge Computing

The growth of the wireless industry and new technology implementations over the past two decades has driven a rapid migration from on-premises data centers to cloud servers. However, with the increasing number of Internet of Things (IoT) applications and servers, performing computation at either data centers or cloud servers may not be an efficient approach.

Cloud computing requires significant bandwidth to move data from the customer premises to the cloud and back, which further increases latency. Because IoT applications and devices that require real-time computation have stringent latency requirements, the computing capabilities need to be at the edge - closer to the source of data generation.

Edge devices can include many different things, such as an IoT sensor, an employee’s notebook computer, their latest smartphone, the security camera or even the Internet-connected microwave oven in the office break room. Edge gateways themselves are considered edge devices within an edge-computing infrastructure.

The edge gateway is the core element in edge/fog computing. As the name suggests, it provides gateway functions - it connects sensors/nodes at one end, provides one or more local functions, and extends bi-directional communications to the cloud.
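The three roles named above (sensor connection, local function, bi-directional cloud link) can be illustrated with a small in-memory class. `EdgeGateway` and its method names are hypothetical and no real transport is involved; a deployed gateway would typically use a messaging protocol for both directions.

```python
from collections import deque


class EdgeGateway:
    """Hypothetical gateway: sensors on one side, cloud link on the other."""

    def __init__(self, local_fn):
        self.local_fn = local_fn   # local function applied to each reading
        self.uplink = deque()      # messages waiting to go to the cloud
        self.config = {}           # settings pushed down from the cloud

    def on_sensor_reading(self, sensor_id, value):
        """Sensor side: process a reading locally and queue the result."""
        result = self.local_fn(value)
        self.uplink.append({"sensor": sensor_id, "value": result})

    def on_cloud_command(self, key, value):
        """Cloud-to-gateway direction: accept a setting from the cloud."""
        self.config[key] = value

    def flush_uplink(self):
        """Gateway-to-cloud direction: hand over and clear queued messages."""
        batch = list(self.uplink)
        self.uplink.clear()
        return batch


# The local function here converts Celsius to Fahrenheit as a stand-in.
gw = EdgeGateway(local_fn=lambda c: round(c * 9 / 5 + 32, 1))
gw.on_sensor_reading("t1", 20.0)
gw.on_cloud_command("sample_rate_hz", 10)
batch = gw.flush_uplink()
print(batch)       # [{'sensor': 't1', 'value': 68.0}]
print(gw.config)   # {'sample_rate_hz': 10}
```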

- The Benefits of Edge Computing

Edge computing can be placed at enterprise premises, for example inside factory buildings, in homes, and in vehicles, including trains, planes and private cars. The edge infrastructure can be managed or hosted by communication service providers or other types of service providers. Several use cases require various applications to be deployed at different sites. In such scenarios a distributed cloud is useful; it can be seen as an execution environment for applications over multiple sites, including connectivity, managed as one solution.

With edge computing, each intelligent device - including smartphones, drones, sensors, robots and autonomous cars - shifts some of the data processing from the cloud to the edge. The cloud will continue to be used to manage IoT devices and to analyze large datasets in use cases where immediate action is not imperative.

The benefits of edge computing manifest in these areas:

- Latency: moving data computing to the edge reduces latency.

- Bandwidth: pushing processing to edge devices, instead of streaming data to the cloud for processing, decreases the need for high bandwidth while reducing response times.

- Security: from a certain perspective, edge computing provides better security because data does not have to traverse a wide-area network and instead stays close to the edge devices where it is generated.
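The bandwidth benefit is easy to quantify with back-of-the-envelope arithmetic. The example below assumes a camera that produces a 4 Mbps stream and an edge device that uploads only 30 minutes of flagged footage per day; both figures are made up for illustration.

```python
def daily_upload_gb(bitrate_mbps, hours):
    """Data volume in gigabytes for a stream of the given bitrate and duration."""
    bits = bitrate_mbps * 1e6 * hours * 3600
    return bits / 8 / 1e9


raw_gb = daily_upload_gb(4.0, 24)      # stream everything to the cloud
edge_gb = daily_upload_gb(4.0, 0.5)    # upload only flagged footage
saving = 1 - edge_gb / raw_gb

print(f"{raw_gb:.1f} GB vs {edge_gb:.1f} GB, saving {saving:.1%}")
```

Under these assumptions the daily upload drops from 43.2 GB to 0.9 GB per camera.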

- Going Beyond Edge Computing with Distributed Cloud

Distributed cloud computing refers to having computation, storage, and networking in a micro-cloud located outside the centralized cloud. It generalizes the cloud computing model to position, process, and serve data and applications from geographically distributed sites to meet requirements for performance, redundancy and regulations. Examples of a distributed cloud include both fog computing and edge computing. Establishing a distributed cloud situates computing closer to the end user, providing decreased latency and opportunities for increased security.

Edge computing is a solution where data is processed as close as possible to the place where it is generated. Applications that can benefit from edge computing are those where low latency and high throughput are critical, or where it is too expensive to send the data back to a distant cloud for processing. Edge computing also helps where the transport network is bandwidth constrained or unreliable, or where the data is too sensitive to be sent over public networks, even if encrypted.

Therefore, edge computing is not a different computing paradigm but an extension of distributed cloud computing. The two models can be reconciled by considering edge computing resources as a “micro” cloud data center, with the edge storage and computing resources connected to larger cloud data centers for big data analysis and bulk storage.

- Creating the Next-Generation Edge-Cloud Ecosystem

Distributed cloud computing expands the traditional, large data center-based cloud model to a set of distributed cloud infrastructure components that are geographically dispersed.

Distributed cloud computing continues to offer on-demand scaling of computing and storage while moving it closer to where these are needed for improved performance. Edge computing is a complementary aspect of distributed cloud computing, and represents the farthest end of a distributed cloud architecture.

Edge computing has great potential to help communication service providers improve content delivery, enable extremely low-latency use cases, and meet stringent legal requirements on data security and privacy. To succeed, they need to deliver solutions that can host different kinds of platforms and provide a high level of flexibility for application developers.

- Edge AI

Edge AI means that AI algorithms run locally on a hardware device, using data (sensor data or signals) created on that device. A device using Edge AI software does not need a network connection to work properly; it can process data and make decisions independently.
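As a rough illustration of on-device inference, the sketch below evaluates a tiny logistic-regression model using only the Python standard library. The weights, bias, and the `"alert"`/`"ok"` labels are hypothetical stand-ins for a model trained elsewhere and shipped to the device; nothing in it touches the network.

```python
import math

# Hypothetical parameters, standing in for a model trained elsewhere
# and copied onto the device. Inference needs no connectivity.
WEIGHTS = [0.8, -0.5]
BIAS = -0.2


def predict(features):
    """Return the model's probability for one feature vector."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def decide(features, threshold=0.5):
    """Turn the probability into a local decision."""
    return "alert" if predict(features) >= threshold else "ok"


print(decide([2.0, 0.5]))   # alert
print(decide([0.1, 1.0]))   # ok
```

Production edge deployments usually run much larger models through an optimized runtime, but the control flow is the same: sensor data in, decision out, all on the device.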

AI relies heavily on data transmission and computation of complex machine learning algorithms. Edge computing sets up a new age computing paradigm that moves AI and ML to where the data generation and computation actually take place: the network’s edge. The amalgamation of both edge computing and AI gave birth to a new frontier: Edge AI.

Edge AI allows faster computing and insights, better data security, and efficient control over continuous operation. As a result, it can enhance the performance of AI-enabled applications and keep operating costs down. Edge AI can also help AI applications overcome the technological challenges they face.

Edge AI enables ML, the autonomous application of deep learning (DL) models, and advanced algorithms to run on Internet of Things (IoT) devices themselves, away from cloud services.

- Building Edge Service Environment in Hospitals

Many organizations are migrating their legacy applications to containerized applications and cloud service environments, often located in remote data centers. As edge technologies rise, so does the demand for native edge service environments.

For example, building edge infrastructure distributed across academic hospitals is one concrete undertaking. Hospitals need to keep data on-site, but to train artificial intelligence models that can help detect and treat complex diseases such as cancer, tumors and cardiovascular disease, they need data sets far larger than their own.

Building distributed, federated, and highly secure datasets across hospital infrastructure enables medical experts and researchers to collaborate effectively across diverse and distributed datasets and to make significant progress on cardiovascular diseases, cancer, genetic diseases, kidney diseases, and other conditions.

What is needed is an affordable infrastructure that allows medical experts to use data from other hospitals to train their models without transferring or disclosing that data outside the hospital where it resides.
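One common way to train on data that never leaves each site is federated averaging, shown here as a toy sketch. The text does not name a specific algorithm, so this choice is an assumption, as are the hospital names, the one-parameter model `y ≈ w * x`, and the synthetic data points. Each site computes an update on its own data, and only the updated weight and the sample count are shared.

```python
def local_update(w, data, lr=0.02):
    """One gradient step on a site's private (x, y) pairs for the model y ≈ w * x."""
    n = len(data)
    grad = sum(2 * (w * x - y) * x for x, y in data) / n
    return w - lr * grad, n


def federated_average(updates):
    """Combine site weights, weighting each by its number of samples."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


# Synthetic toy data; in practice each list would stay inside its hospital.
site_data = {
    "hospital_a": [(1.0, 2.0), (2.0, 4.0)],
    "hospital_b": [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
}

global_w = 0.0
for _ in range(50):
    updates = [local_update(global_w, d) for d in site_data.values()]
    global_w = federated_average(updates)   # only weights cross site boundaries

print(round(global_w, 4))   # 2.0
```

Sharing weights instead of records reduces exposure but does not by itself guarantee privacy; real deployments typically add protections such as secure aggregation, which are out of scope for this sketch.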